Joint Correcting and Refinement for Balanced Low-Light Image Enhancement

IEEE Transactions on Multimedia

Nana Yu, Hong Shi, Yahong Han

College of Intelligence and Computing, and Tianjin Key Lab of Machine Learning, Tianjin University

Abstract

Low-light image enhancement demands an appropriate balance among brightness, color, and illumination. Existing methods often focus on one aspect of the image without considering this balance, which causes problems such as color distortion and overexposure. This seriously affects both human visual perception and the performance of high-level visual models. In this work, we propose a novel synergistic structure that balances brightness, color, and illumination more effectively. Specifically, the proposed Joint Correcting and Refinement Network (JCRNet) consists mainly of three stages. Stage 1: we utilize a basic encoder-decoder and a local supervision mechanism to extract local information and more comprehensive details for enhancement. Stage 2: cross-stage feature transmission and spatial feature transformation further facilitate color correction and feature refinement. Stage 3: we employ a dynamic illumination adjustment approach to embed residuals between predicted and ground-truth images into the model, adaptively adjusting illumination balance. Extensive experiments demonstrate that the proposed method exhibits comprehensive performance advantages over 21 state-of-the-art methods on 9 benchmark datasets. Furthermore, an additional experiment validates the effectiveness of our approach in downstream visual tasks (e.g., saliency detection). Compared to several enhancement models, the proposed method effectively improves the segmentation results and quantitative metrics of saliency detection.

Overall architecture of the Joint Correcting and Refinement Network

Fig. 1: Details of the JCRNet architecture. Specifically, the proposed JCRNet consists of three stages: feature extraction stage (FES), joint refinement stage (JRS), and illumination adjustment stage (IAS). The three stages work together to enhance low-light images, thereby balancing brightness, color, and exposure.

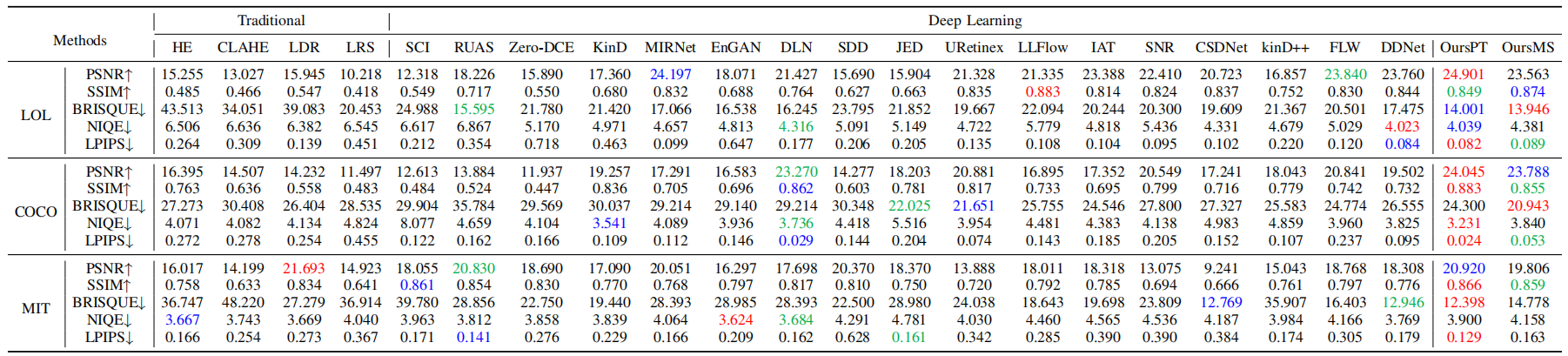

Quantitative Evaluation

Table 1: Average performance of the proposed method and competing methods on the LOL, COCO, and MIT datasets. OursPT is implemented in the PyTorch framework, and OursMS in the MindSpore framework. The best, second-best, and third-best scores are shown in red, blue, and green, respectively.

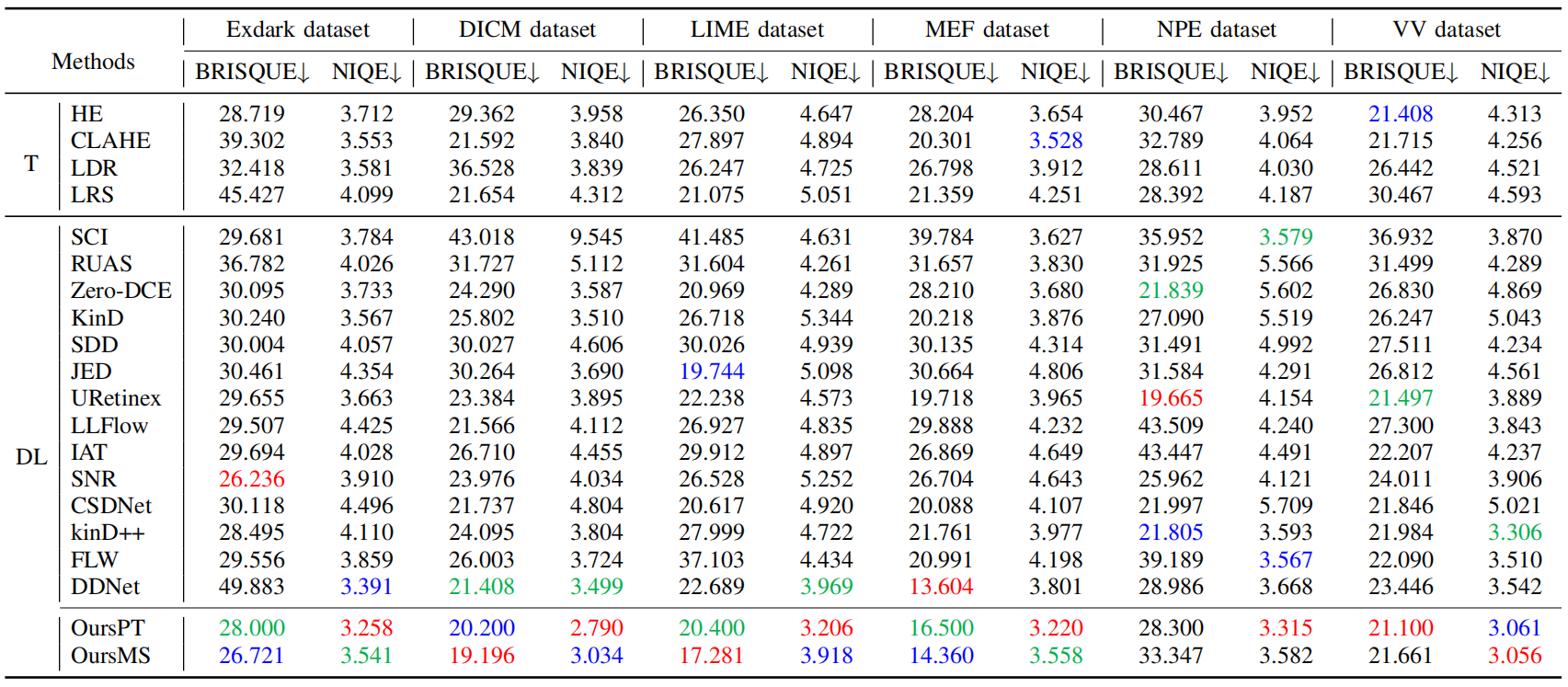
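
Table 1 covers datasets for which reference images are available, so its scores are presumably full-reference metrics. As a minimal sketch, assuming PSNR is among them (the metric names are not listed in the text here), the function below computes PSNR between an enhanced image and its reference. The name `psnr`, the flat-list image representation, and the 8-bit default `max_value` are illustrative assumptions, not the authors' evaluation code.

```python
import math


def psnr(pred, target, max_value=255.0):
    """Peak signal-to-noise ratio (dB) between two equally sized images.

    Images are passed as flat sequences of pixel values. Plain Python
    lists keep this sketch dependency-free (an assumption for
    illustration; real pipelines would use an array library).
    """
    if len(pred) != len(target) or len(pred) == 0:
        raise ValueError("images must be non-empty and the same size")
    # Mean squared error over all pixels.
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)
```

Higher values indicate an enhanced image closer to the reference; identical images yield infinity.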

Table 2: Average performance of the proposed method and competing methods on multiple no-reference datasets. OursPT is implemented in the PyTorch framework, and OursMS in the MindSpore framework. The best, second-best, and third-best scores are shown in red, blue, and green, respectively. (T: traditional methods; DL: deep learning methods.)

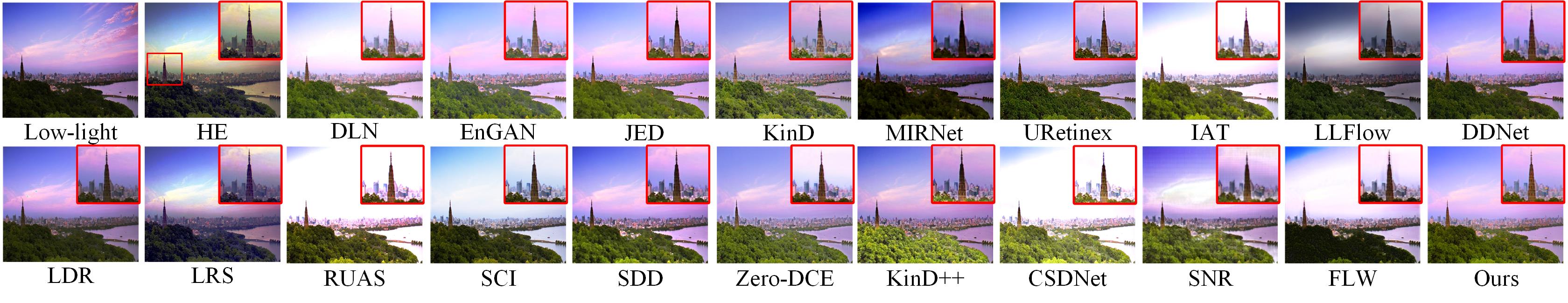

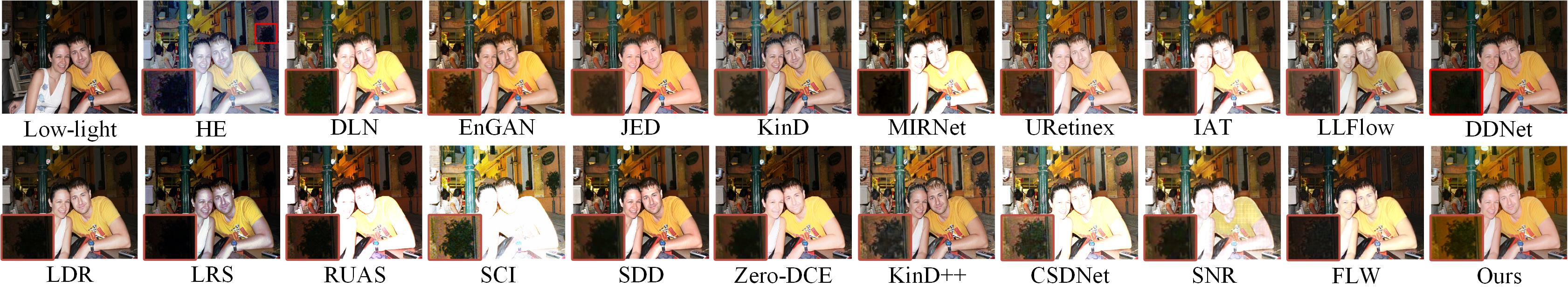

Qualitative Evaluation

Fig. 2: Visualizing the results of the LOL dataset. For clarity, the magnified parts of the images are displayed.

Fig. 3: Visualizing the results of the COCO dataset. For a clear comparison of the visual effects, the error images between the ground-truth image and each enhancement result are shown in the lower left corner.
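
An error image of the kind described in the Fig. 3 caption can be sketched as a per-pixel difference between an enhancement result and its ground truth. How the authors computed theirs is not stated; the absolute difference on a single-channel image used below, and the name `error_map`, are assumptions for illustration only.

```python
def error_map(pred, target):
    """Per-pixel absolute difference between two H x W single-channel images.

    Images are nested lists (rows of pixel values). Larger values mark
    regions where the enhanced result deviates more from the ground truth.
    """
    if len(pred) != len(target) or any(
        len(row_p) != len(row_t) for row_p, row_t in zip(pred, target)
    ):
        raise ValueError("images must have the same shape")
    return [
        [abs(p - t) for p, t in zip(row_p, row_t)]
        for row_p, row_t in zip(pred, target)
    ]


# Tiny 2 x 2 example: enhanced result vs. ground truth.
enhanced = [[10, 200], [0, 50]]
ground_truth = [[12, 190], [0, 55]]
errors = error_map(enhanced, ground_truth)
```

A darker error image (values near zero) means the enhancement is closer to the ground truth.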

Fig. 4: Visualizing the results of the NPE dataset. For clarity, the magnified parts of the images are displayed.

Fig. 5: Visualizing the results of the VV dataset. For clarity, the magnified parts of the images are displayed.

Fig. 6: Visualizing the results of the ExDark dataset. For clarity, the magnified parts of the images are displayed.

Fig. 7: Visualizing the results of the MEF dataset. For clarity, the magnified parts of the images are displayed.

Citation

If you find this useful in your work, please consider citing the following reference:

@Article{JCRNet,

author = {Nana Yu and Hong Shi and Yahong Han},

title = {Joint Correcting and Refinement for Balanced Low-Light Image Enhancement},

journal = {IEEE Transactions on Multimedia},

year = {2023},

doi = {10.1109/TMM.2023.3348333}

}

Any questions regarding this work can be addressed to yunana@tju.edu.cn.